Using arm signs with YOLO

2025-01-28 python yolo raspi

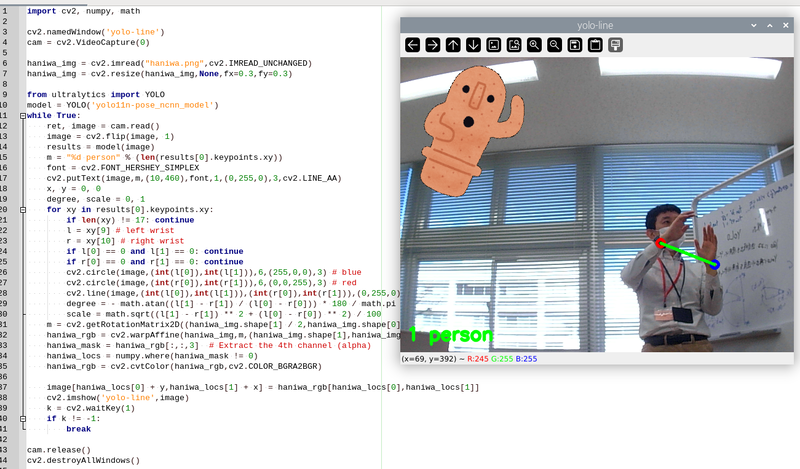

This is an example that uses the coordinates of both wrists, taken from the keypoints returned by pose estimation.
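For reference, YOLO pose models trained on COCO return 17 keypoints per person, and in that ordering the wrists sit at indices 9 and 10. Here is a minimal sketch of picking them out of a plain list of (x, y) pairs. `pick_wrists` is a hypothetical helper I made up for illustration, and treating (0, 0) as "not detected" is an assumption that mirrors the check in the script further down.

```python
# COCO keypoint order (17 points per person).
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]
LEFT_WRIST = COCO_KEYPOINTS.index("left_wrist")
RIGHT_WRIST = COCO_KEYPOINTS.index("right_wrist")


def pick_wrists(points):
    """Return (left, right) wrist coordinates, or None if either is missing.

    `points` is a list of 17 (x, y) pairs for one person (hypothetical input).
    """
    if len(points) != len(COCO_KEYPOINTS):
        return None
    left, right = points[LEFT_WRIST], points[RIGHT_WRIST]
    for x, y in (left, right):
        # Assumption: an undetected keypoint is reported as (0, 0).
        if x == 0 and y == 0:
            return None
    return left, right
```

Returning `None` for an incomplete person keeps the caller's loop simple: it can just skip that person and move on.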

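From the right and left wrist coordinates, the script computes the distance and the angle between the hands, then scales and rotates a haniwa image shown in the corner of the frame to match. Between the OpenCV integration, the Pythagorean theorem, and the trigonometric functions, it should be a perfect fit for high school students... I hope!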
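The math on its own can be sketched without a camera. The function below takes two wrist positions and returns the scale (distance via the Pythagorean theorem, divided by 100 pixels as in the script) and the rotation in degrees (via `atan`). The name `wrist_scale_and_angle` and the `unit` parameter are mine, not part of any library, and the handling of the vertical case is an assumption on my part.

```python
import math


def wrist_scale_and_angle(left, right, unit=100.0):
    """Return (scale, degree) for two wrist points given as (x, y) pairs."""
    dx = left[0] - right[0]
    dy = left[1] - right[1]

    # Pythagorean theorem: distance between the two wrists in pixels.
    distance = math.sqrt(dx ** 2 + dy ** 2)
    scale = distance / unit

    if dx == 0:
        # Wrists stacked vertically: atan's argument would be a division
        # by zero, so pick +/-90 degrees directly (assumed convention).
        degree = -math.copysign(90.0, dy)
    else:
        # Image y grows downward, hence the sign flip.
        degree = -math.degrees(math.atan(dy / dx))
    return scale, degree
```

For example, `wrist_scale_and_angle((300, 100), (100, 100))` describes hands held level and 200 pixels apart, which gives a scale of 2 and no rotation.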

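At least that is what I thought when I made it, but inverse trigonometric functions are actually university-level material. Oh well, in Python you can get the angle easily with atan, so maybe that is fine...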
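As a side note, `math.atan2` is a common alternative to `atan` because it takes the two differences separately and never divides. The sketch below is not what the script in this post uses; it only shows how the two relate. `angle_atan2` and `fold_to_half_turn` are hypothetical names of my own.

```python
import math


def angle_atan2(dx, dy):
    """Angle of the vector (dx, dy) in degrees, in the range [-180, 180]."""
    return math.degrees(math.atan2(dy, dx))


def fold_to_half_turn(angle):
    """Fold an angle into (-90, 90], which is what atan(dy / dx) would give.

    A line through two points has no direction, so angles that differ by
    180 degrees describe the same line.
    """
    if angle > 90:
        return angle - 180
    if angle <= -90:
        return angle + 180
    return angle
```

With `atan2` alone the image could make a full turn when the hands cross over; folding the result keeps it within a half turn, like `atan` does.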

import math

import cv2
import numpy
from ultralytics import YOLO

cv2.namedWindow('yolo-line')
cam = cv2.VideoCapture(0)

# Load the haniwa image with its alpha channel and shrink it to 30%.
haniwa_img = cv2.imread("haniwa.png", cv2.IMREAD_UNCHANGED)
haniwa_img = cv2.resize(haniwa_img, None, fx=0.3, fy=0.3)

model = YOLO('yolo11n-pose_ncnn_model')

while True:
    ret, image = cam.read()
    if not ret:  # stop if the camera returned no frame
        break
    image = cv2.flip(image, 1)  # mirror the frame horizontally
    results = model(image)

    # Draw the number of detected people.
    label = "%d person" % (len(results[0].keypoints.xy))
    font = cv2.FONT_HERSHEY_SIMPLEX
    cv2.putText(image, label, (10, 460), font, 1, (0, 255, 0), 3, cv2.LINE_AA)

    x, y = 0, 0           # offset of the haniwa overlay in the frame
    degree, scale = 0, 1  # defaults when no wrists are found
    for xy in results[0].keypoints.xy:
        if len(xy) != 17: continue
        l = xy[9]   # left wrist
        r = xy[10]  # right wrist
        # Skip this person if either wrist is reported at (0, 0).
        if l[0] == 0 and l[1] == 0: continue
        if r[0] == 0 and r[1] == 0: continue
        cv2.circle(image, (int(l[0]), int(l[1])), 6, (255, 0, 0), 3)  # blue
        cv2.circle(image, (int(r[0]), int(r[1])), 6, (0, 0, 255), 3)  # red
        cv2.line(image, (int(l[0]), int(l[1])), (int(r[0]), int(r[1])), (0, 255, 0), 3)  # green
        dx = float(l[0] - r[0])
        dy = float(l[1] - r[1])
        # Handle dx == 0 explicitly so the angle never depends on a division by zero.
        degree = -math.degrees(math.atan(dy / dx)) if dx != 0 else -math.copysign(90.0, dy)
        scale = math.sqrt(dx ** 2 + dy ** 2) / 100

    # Rotate and scale the haniwa about its center.
    center = (haniwa_img.shape[1] / 2, haniwa_img.shape[0] / 2)
    rot = cv2.getRotationMatrix2D(center, degree, scale)
    haniwa_rgb = cv2.warpAffine(haniwa_img, rot, (haniwa_img.shape[1], haniwa_img.shape[0]))

    # Paste only the pixels whose alpha (4th channel) is non-zero.
    haniwa_mask = haniwa_rgb[:, :, 3]
    haniwa_locs = numpy.where(haniwa_mask != 0)
    haniwa_rgb = cv2.cvtColor(haniwa_rgb, cv2.COLOR_BGRA2BGR)
    image[haniwa_locs[0] + y, haniwa_locs[1] + x] = haniwa_rgb[haniwa_locs[0], haniwa_locs[1]]

    cv2.imshow('yolo-line', image)
    k = cv2.waitKey(1)
    if k != -1:  # any key press exits
        break

cam.release()
cv2.destroyAllWindows()
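To see where the trigonometry ends up, here is a pure-Python sketch of the 2x3 matrix that `cv2.getRotationMatrix2D` is documented to return for a given center, angle, and scale. It is only meant for checking the math by hand; `rotation_matrix_2d` and `apply_affine` are hypothetical helpers of my own, not OpenCV functions.

```python
import math


def rotation_matrix_2d(center, angle_deg, scale):
    """Build a 2x3 affine matrix that rotates about `center` and scales."""
    cx, cy = center
    a = scale * math.cos(math.radians(angle_deg))
    b = scale * math.sin(math.radians(angle_deg))
    return [
        [a, b, (1 - a) * cx - b * cy],
        [-b, a, b * cx + (1 - a) * cy],
    ]


def apply_affine(matrix, point):
    """Apply a 2x3 affine matrix to a single (x, y) point."""
    x, y = point
    return (
        matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
        matrix[1][0] * x + matrix[1][1] * y + matrix[1][2],
    )
```

Whatever the angle and scale, the center maps to itself, which is why the haniwa spins in place instead of drifting. A positive angle moves a point on the right of the center upward on screen, that is, counter-clockwise in image coordinates where y grows downward.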